Breaking News

AI, deepfakes, and misinformation are already complicating the political landscape. How can the American public navigate this election season?

Today, Flam asks questions about everything from astrophysics to the reliability of medical studies and, increasingly, the science of disinformation, with recent columns on how to spot political deepfakes and how consumers can avoid fake news. In this conversation with Techer, Flam talks about how disinformation works and how we might be able to arm ourselves against its onslaught, just in time for the election season's media apex.

Faye Flam: A couple of years ago I interviewed a former propagandist who’d worked for the Czechoslovakian intelligence service, which was run by the Soviets. He eventually managed to defect to the US and become a professor, and he told me that the most effective disinformation needs to be mostly true, and the false part should resonate with people’s existing beliefs. Another thing that can be convincing is the illusion that lots of other people have bought into an idea or conspiracy theory or that lots of people trust some authority figure. On Twitter, fake accounts known as bots can make it look like hundreds or thousands of people agree with posts that aren’t well supported, or that alleged experts are wildly popular.

Meta has said that it's going to start putting labels on disinformation, and Google says labels are coming to YouTube. You've written about some of the "bot policing" that already exists on platforms. What are the limits to tech solutions to this issue?

FF: There’s some great research on the proliferation of bots and tools that can identify them. But those who’ve been tracking bots say AI will allow bad actors to make them ever more realistic, so creators of misinformation could remain a step ahead of those trying to police it. That means social media users will have to become more skeptical.

And identifying misinformation isn't that easy, even though people think they know it when they see it. During the pandemic, there was so much uncertainty, and scientists were learning as they went along. Some of what was labeled as misinformation was really legitimate minority opinion or involved value judgments, especially about contentious issues such as school closures or the origin of the virus.

What can we do as news consumers or media consumers to best arm ourselves against disinformation?

FF: Try to read sources that are accountable, ones that have to admit when they get things wrong. And consume media from diverse sources. All the media outlets I've worked for are accountable. We have to write corrections if we get things wrong, and anyone making things up would be fired, just as scientists making up data could be fired for fraud. But with some of the web-based publications cropping up, some of which rely on AI to generate stories, there's no accountability. You can say anything. So it can be confusing for people to navigate this landscape of stories as they get pulled from these different sources and spread around on social media. Someone I interviewed compared today's media to a Turkish bazaar, where you've got this crazy maze of different vendors and it's hard to even find your way out of it. Some vendors might be honest, and some might be trying to cheat you.

FF: It’s all so new, but there have been a few studies on the way people perceive fake images. Essentially, they’ve found that people are not as good as they think they are at spotting deepfakes, partly because they tend to look for the opposite of what they should look for. For instance, real photographs have certain kinds of flaws in the lighting, and people are seeing these flaws and saying, huh, that’s a deepfake. But the deepfakes are often too perfect. If you step back, though, lots of technological advances have been harnessed to mislead and manipulate people—radio, television, social media, and now AI. All these advances have also helped people become better informed. Recently a group of scientists showed that chatbots did better than humans at convincing people to let go of conspiracy theories. It’s hard to know at this point whether AI is going to manipulate people in an inherently new way or whether people will adjust to it with increased skepticism, recognizing they can’t always believe what they see on their screens.

Broadly speaking, disinformation has eroded some of the public trust both in science and in journalism. What do you think those institutions have to do to get back that trust?

FF: There have been some surveys done since the start of the pandemic showing a small drop in the amount of trust people put in scientists, but those probably reflect people’s skepticism about public health. I actually think that people do trust science, but don’t always believe that our public institutions are using good science to make recommendations for us. During COVID, people never got a good scientific rationale for closed parks and beaches, outdoor mask mandates, or mandatory second and third booster shots. In some ways, scientists shouldn’t expect us to trust them unconditionally. They’re supposed to show the evidence behind their claims. We’re supposed to question them. That’s the nature of the scientific enterprise. I would say that I trust the judgment of some scientists, and that’s because they’ve earned it by offering reliable information and admitting to uncertainty or mistakes.

You have to work through all of this as a science journalist. You have to be accurate, as you mentioned. What can the general news consumer learn from how you process and verify information?

FF: That cuts to the heart of all this. Scientists and journalists in some ways do the same thing. We know that we’re not going to get the absolute truth. There’s uncertainty, there’s bias. You see a little piece of the world and the best you can do is say, here’s what I’ve learned, and here’s how I learned it. But I try to be humble about how much I really know and where things are getting speculative, and I gravitate toward interviewing scientists who are humble and realistic about the limits of their knowledge. I’m lucky I get to deal with news about science, so I don’t have to try to manipulate people into feeling outrage in order to generate interest. Instead, I can follow my own curiosity and try to stimulate curiosity in readers.

Dan Morrell is the director of engagement content at Harvard Business School and the cofounder of Dog Ear Creative. His writing has appeared in The Atlantic, The New York Times, The Boston Globe, Slate, Fast Company, and Monocle, as well as many alumni magazines.

Related Articles

-

A Legendary Hack Turns 40

In this moment of Caltech mischief that required months of planning, students Ted Williams, PhD (BS ’84) and Dan Kegel (BS ’86) manipulated the sco...

-

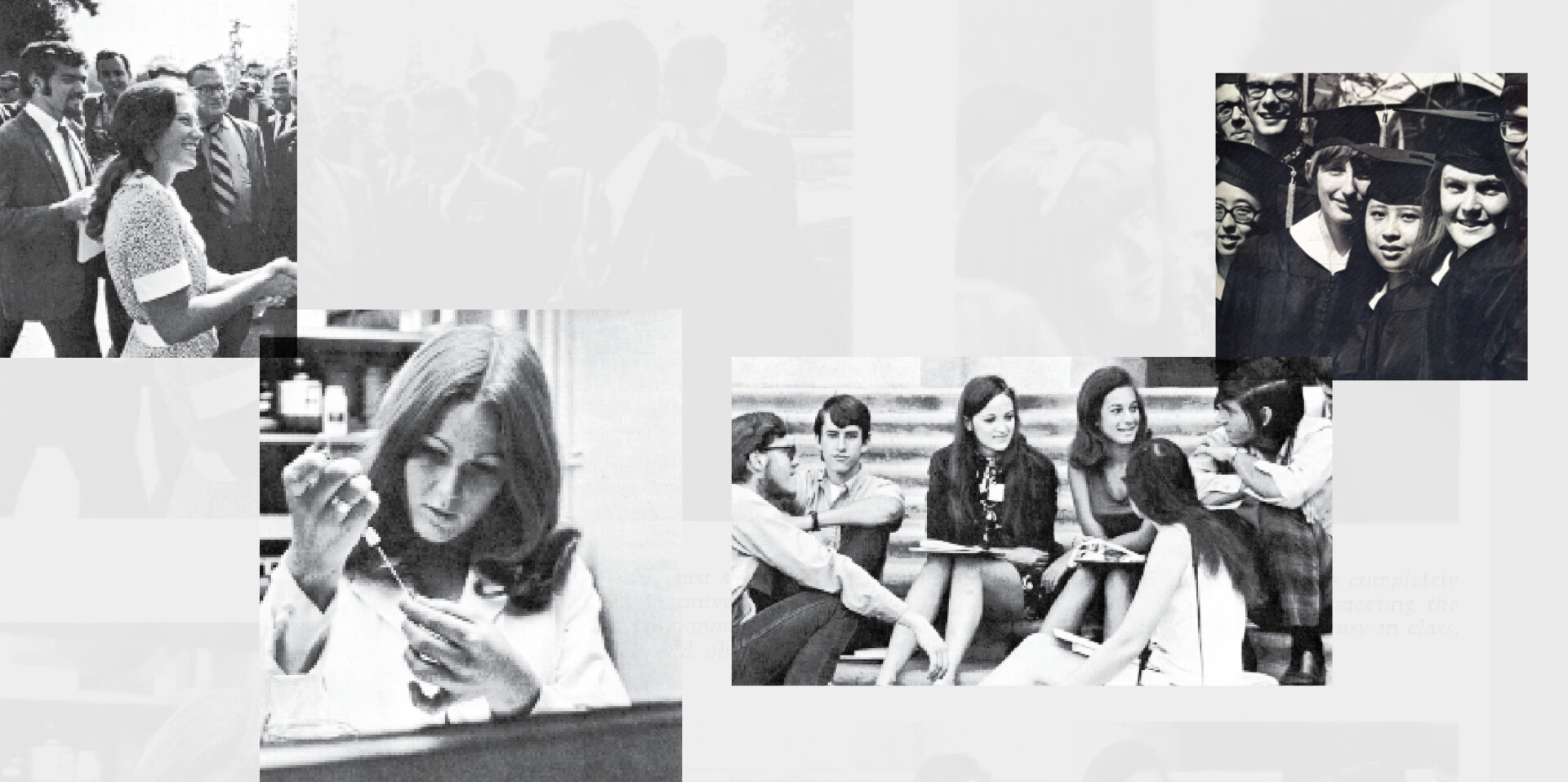

The Pioneers

On the 50th anniversary of their graduation, the first four-year female graduates reflect on their time on campus—and where they went from here.

-

All In

The key to a more sustainable future? Make sure everyone can join the hunt for solutions, says National Energy Technology Laboratory director Maria...